A generative adversarial network (GAN) is a type of neural network architecture used to generate fake data that resembles real data. A GAN pits two networks against each other. In most machine learning tasks, input is given to a model, and the model is trained to make predictions based on the training data. In a GAN, one neural network generates the data and another network discriminates between real and fake samples.

GANs in deep learning

GANs are generative models that produce new data by learning the patterns in the training data. The generated samples are intended to closely resemble the real data.

They are commonly used for image generation and style transfer tasks.

Components/Architecture of GANs

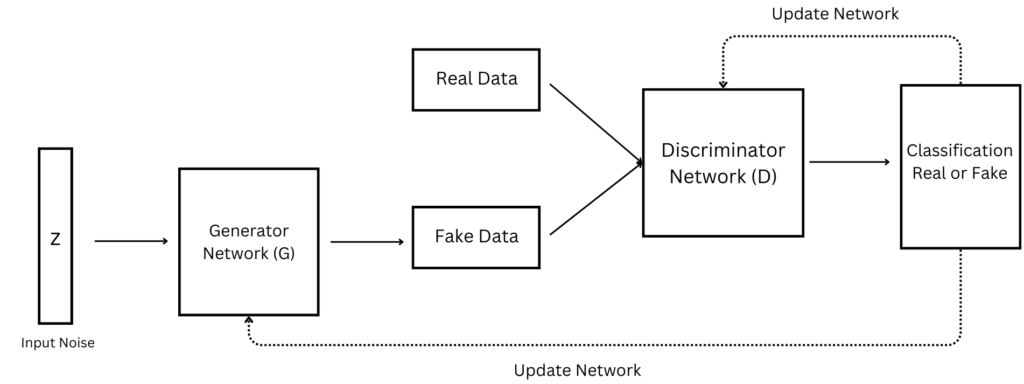

A GAN consists of two neural networks, a generator and a discriminator:

Generator: The generator produces fake data samples using the patterns learned from the training data, and its output is given as input to the discriminator.

- The input to the generator is random noise, which it transforms into fake data (for example, text or an image) using the underlying patterns learned from the training data.

- The generator aims to produce data samples realistic enough that the discriminator cannot tell them apart from real ones.

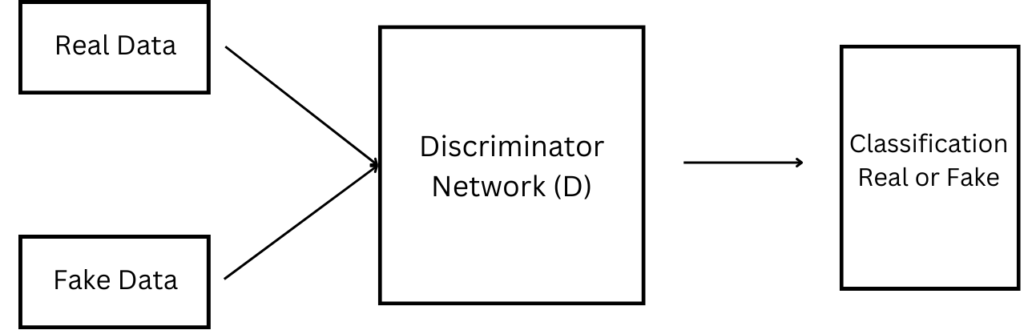

Discriminator: The discriminator network is used to discriminate between real samples from the training data and fake samples produced by the generator.

- The input to the discriminator network is a mix of real samples and fake samples produced by the generator.

- During training, the discriminator gradually becomes better at distinguishing real samples from fake ones.
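The two components can be sketched in a few lines of Python. This is a minimal illustration rather than a realistic architecture: it assumes one-dimensional data, an affine generator, and a single logistic unit as the discriminator. The parameter names `w`, `b`, `a`, and `c` are hypothetical.

```python
import math
import random

def generator(z, w, b):
    """Toy generator: maps a noise value z to a fake sample with an affine map."""
    return w * z + b

def discriminator(x, a, c):
    """Toy discriminator: logistic unit giving the estimated probability that x is real."""
    return 1.0 / (1.0 + math.exp(-(a * x + c)))

random.seed(0)
z = random.gauss(0.0, 1.0)                  # noise input to the generator
fake = generator(z, w=0.5, b=1.0)           # fake sample G(z)
p_real = discriminator(fake, a=1.0, c=0.0)  # D(G(z)), a value between 0 and 1
```

In a practical GAN both functions would be multi-layer networks, but the interfaces stay the same: noise in and a sample out for the generator, a sample in and a probability out for the discriminator.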

Working of GANs

The working of GANs is explained in the following steps:

- Initialization of networks: First, the generator and discriminator networks are created and their weights are initialized. Here the generator network is denoted by G and the discriminator network by D.

- Fake data generation: Next, the generator takes random noise z as input and tries to generate realistic data points.

- Discriminator training: In this step, the discriminator is trained on real and fake samples so that it can distinguish between them. Real samples are labeled 1 and fake samples are labeled 0.

- Loss calculation and update: The loss is calculated and both networks are updated using backpropagation.
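To make the labeling and loss steps concrete, the sketch below computes a binary cross-entropy loss for a discriminator on one real and one fake sample. The discriminator outputs used here are made-up values chosen for illustration, and `bce` is a hypothetical helper, not a library function.

```python
import math

def bce(prediction, label):
    """Binary cross-entropy for one prediction in (0, 1) and a label of 0 or 1."""
    eps = 1e-12  # keeps the argument of log away from 0
    p = min(max(prediction, eps), 1.0 - eps)
    return -(label * math.log(p) + (1 - label) * math.log(1.0 - p))

# Real samples carry label 1, fake samples carry label 0.
# First argument: hypothetical discriminator output for that sample.
d_loss_good = bce(0.9, 1) + bce(0.2, 0)  # predictions mostly agree with the labels
d_loss_poor = bce(0.4, 1) + bce(0.7, 0)  # predictions mostly disagree with the labels
```

A lower loss means the discriminator's predictions agree better with the labels, which is what its weight updates aim for.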

Training GANs

The training of a Generative Adversarial Network (GAN) involves a complex interplay between two neural networks: the generator and the discriminator. Here’s a detailed explanation of how GANs are trained:

1. Initialization:

Generator (G): Initially, the generator network is initialized with random weights. It takes random noise vectors as input and generates fake data samples.

Discriminator (D): The discriminator network is also initialized with random weights. It learns to differentiate between real data samples and fake data samples generated by the generator.

2. Training Process:

- Generating Fake Data: The generator takes random noise z from a latent space as input and generates fake data 𝐺(𝑧).

- Training the Discriminator: The discriminator is trained using a mix of real data samples 𝑥 from the training dataset and fake data samples 𝐺(𝑧) generated by the generator.

- The discriminator’s objective is to correctly classify real data as real (labeled as 1) and fake data as fake (labeled as 0). It is trained using binary cross-entropy loss to update its weights and improve its discrimination ability.

- Training the Generator: The generator aims to generate data that can fool the discriminator into classifying it as real.

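- The generator is trained using the discriminator’s feedback: the generator’s loss is computed from the discriminator’s predictions on fake data and backpropagated through the discriminator into the generator, and only the generator’s weights are updated in this step.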

- The generator aims to minimize the binary cross-entropy loss between the discriminator’s predictions on fake data 𝐷(𝐺(𝑧)) and a vector of ones (indicating real data).

- Adversarial Training:

- The training process alternates between training the discriminator (to better distinguish real from fake) and training the generator (to produce more realistic fake data).

- This adversarial training leads to a competitive dynamic where the generator improves its ability to generate realistic data as the discriminator becomes more skilled at distinguishing real from fake data.
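The alternating procedure above can be sketched as a small training loop. This is a toy illustration under stated assumptions, not a production implementation: the data is one-dimensional, the real samples are drawn from a Gaussian with mean 4, the generator is an affine map with parameters `w` and `b`, the discriminator is a single logistic unit with parameters `a` and `c`, and the gradients are written out by hand instead of using a deep learning framework. All function and parameter names are hypothetical.

```python
import math
import random

def sigmoid(s):
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)

def d_loss(a, c, real, fake):
    """Mean BCE of the discriminator: real samples labeled 1, fake samples labeled 0."""
    loss_real = -sum(math.log(sigmoid(a * x + c)) for x in real) / len(real)
    loss_fake = -sum(math.log(1.0 - sigmoid(a * g + c)) for g in fake) / len(fake)
    return loss_real + loss_fake

def g_loss(a, c, w, b, noise):
    """Mean BCE between D(G(z)) and a vector of ones."""
    return -sum(math.log(sigmoid(a * (w * z + b) + c)) for z in noise) / len(noise)

def discriminator_step(a, c, real, fake, lr):
    """One gradient-descent step on d_loss with respect to (a, c)."""
    grad_a = (sum((sigmoid(a * x + c) - 1.0) * x for x in real) / len(real)
              + sum(sigmoid(a * g + c) * g for g in fake) / len(fake))
    grad_c = (sum(sigmoid(a * x + c) - 1.0 for x in real) / len(real)
              + sum(sigmoid(a * g + c) for g in fake) / len(fake))
    return a - lr * grad_a, c - lr * grad_c

def generator_step(a, c, w, b, noise, lr):
    """One gradient-descent step on g_loss with respect to (w, b); D stays fixed."""
    # d(-log D(g))/dg = (D(g) - 1) * a, then the chain rule through g = w * z + b.
    upstream = [(sigmoid(a * (w * z + b) + c) - 1.0) * a for z in noise]
    grad_w = sum(u * z for u, z in zip(upstream, noise)) / len(noise)
    grad_b = sum(upstream) / len(noise)
    return w - lr * grad_w, b - lr * grad_b

random.seed(0)
a, c = 0.1, 0.0   # discriminator parameters
w, b = 1.0, 0.0   # generator parameters
lr, batch = 0.05, 64

for step in range(200):
    real = [random.gauss(4.0, 1.0) for _ in range(batch)]   # real data x
    noise = [random.gauss(0.0, 1.0) for _ in range(batch)]  # latent noise z
    fake = [w * z + b for z in noise]                       # fake data G(z)
    a, c = discriminator_step(a, c, real, fake, lr)         # update D, G frozen
    w, b = generator_step(a, c, w, b, noise, lr)            # update G, D frozen
```

Each iteration updates the discriminator once and then the generator once. GAN training can oscillate rather than settle, so the final parameter values in a sketch like this depend on the learning rate and the number of steps.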

3. Convergence:

- Ideally, as training progresses, the generator gets better at producing realistic samples that are difficult for the discriminator to differentiate from real data.

- The discriminator simultaneously improves its ability to distinguish real from fake data.

In an ideal scenario, this competition leads to both networks converging to a point where the generator produces high-quality data samples similar to the training data distribution, and the discriminator is unable to reliably differentiate between real and fake samples.
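This ideal outcome can be stated more formally. In the standard formulation, GAN training is written as a two-player minimax game. The symbols p_data (distribution of the real data), p_z (distribution of the noise), and p_g (distribution of the generated samples) are notation introduced here for illustration and are not used elsewhere in this article.

```latex
\min_G \max_D V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]

% For a fixed generator, the optimal discriminator is
D^*(x) = \frac{p_{\text{data}}(x)}{p_{\text{data}}(x) + p_g(x)}

% so when the generator matches the data distribution, p_g = p_{\text{data}},
D^*(x) = \tfrac{1}{2} \quad \text{for every } x
```

An output of 1/2 for every sample is the formal counterpart of a discriminator that cannot reliably differentiate real from fake. The training procedure described earlier has the generator minimize the cross-entropy against a vector of ones, which is a commonly used variant of the generator's side of this objective.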

Real-World Applications

GANs have found applications in various domains, including:

- Image synthesis

- Image-to-image translation

- Data augmentation

- Anomaly detection

GANs have also been used to generate synthetic data for training machine learning models when real data is limited or expensive to obtain.