Stride refers to the step size with which the convolutional filter (kernel) moves across the input image or feature map during the convolution operation. It determines how far the filter shifts horizontally and vertically between successive applications.

Padding refers to the technique of adding extra pixels (usually zeros) around the input image or feature map before applying the convolution operation. It helps preserve the spatial dimensions of the output.

Types of Stride

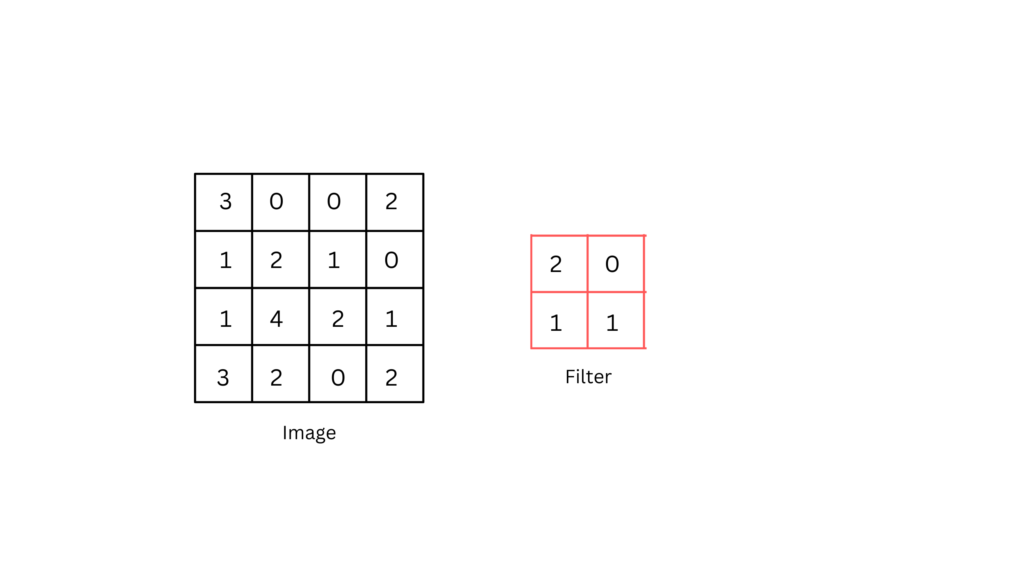

To understand the types of stride, let’s take a 4×4 image and a 2×2 filter, as shown below.

Stride-1 (No Skipping):

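In stride-1 convolution, the filter moves one pixel at a time both horizontally and vertically. This is the default setting in many CNN architectures and produces feature maps whose spatial dimensions stay close to the input size. For the 4×4 image and 2×2 filter, with no padding, the filter fits in 3 positions along each axis, giving a 3×3 feature map.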

Stride > 1:

When the stride is greater than 1 (e.g., stride-2), the filter skips pixels and moves by the specified stride value. Larger stride values produce smaller output feature maps because the input is sampled more sparsely. For the 4×4 image and 2×2 filter, stride-2 with no padding gives a 2×2 feature map.
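The two stride settings can be compared with a minimal sketch in plain Python. The function name `conv2d`, the pixel values, and the diagonal filter are all made up for illustration, and the loop computes an unpadded cross-correlation (what most CNN libraries call convolution) rather than any particular library's implementation.

```python
def conv2d(image, kernel, stride=1):
    """Naive 2D cross-correlation over nested lists, with no padding."""
    n_rows, n_cols = len(image), len(image[0])
    k_rows, k_cols = len(kernel), len(kernel[0])
    # Number of positions where the filter fits along each axis.
    out_rows = (n_rows - k_rows) // stride + 1
    out_cols = (n_cols - k_cols) // stride + 1

    output = []
    for i in range(out_rows):
        row = []
        for j in range(out_cols):
            top, left = i * stride, j * stride  # top-left corner of the window
            total = 0
            for a in range(k_rows):
                for b in range(k_cols):
                    total += image[top + a][left + b] * kernel[a][b]
            row.append(total)
        output.append(row)
    return output


# Hypothetical 4x4 image and 2x2 filter (values chosen only for illustration).
image = [
    [1, 2, 3, 4],
    [5, 6, 7, 8],
    [9, 10, 11, 12],
    [13, 14, 15, 16],
]
kernel = [
    [1, 0],
    [0, 1],
]

out_s1 = conv2d(image, kernel, stride=1)  # 3x3 feature map
out_s2 = conv2d(image, kernel, stride=2)  # 2x2 feature map
print(out_s1)
print(out_s2)
```

With stride-2 the windows do not overlap, so the 2×2 result keeps only the corner entries of the 3×3 stride-1 result.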

Impact of Stride

Reduced Spatial Dimensions: Larger stride values lead to reduced output feature map dimensions, which can be advantageous for downsampling and reducing computational complexity, especially in deep CNN architectures.

Increased Computational Efficiency: Larger strides speed up processing by reducing the number of filter applications, and therefore computations, required per layer. This is most useful in tasks where fine-grained spatial information is less critical.
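To make the saving concrete, the rough count below estimates the multiply-accumulate operations for a single filter over a single channel. The helper `conv_mac_count` and the 224×224 input with a 3×3 filter are assumptions chosen for illustration; the count ignores padding, multiple channels, and bias terms.

```python
def conv_mac_count(input_size, kernel_size, stride):
    """Multiply-accumulates for one square filter over one square, unpadded channel."""
    out = (input_size - kernel_size) // stride + 1  # output positions per axis
    return out * out * kernel_size * kernel_size


macs_s1 = conv_mac_count(224, 3, stride=1)
macs_s2 = conv_mac_count(224, 3, stride=2)
print(macs_s1, macs_s2, macs_s1 / macs_s2)
```

Doubling the stride halves the number of filter positions along each axis, so under these assumptions the work drops to about a quarter.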

Padding

Padding refers to the technique of adding extra layers of pixels (usually zeros) around the input image or feature map before applying convolution operations. This additional padding helps preserve the spatial dimensions of the input and output feature maps, mitigating the issue of shrinking dimensions that can occur during convolution.

Types of Padding

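Valid Padding (No Padding): In valid padding, no padding is added to the input image or feature map, so the filter is applied only where it fits entirely inside the input. As a result, the output feature map shrinks with each convolutional layer, especially when using large filter sizes. At stride 1, an input of size n and a filter of size k give an output of size n - k + 1.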

Same Padding:

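Same padding ensures that the output feature map has the same spatial dimensions as the input when the stride is 1. It achieves this by adding the appropriate amount of padding around the input, calculated from the filter size: for an odd filter size k, (k - 1) / 2 pixels on each side. With a stride greater than 1, the output still shrinks, to roughly the input size divided by the stride.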
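The difference between the two padding types can be sketched in plain Python. The helpers `zero_pad` and `output_size` are hypothetical names introduced here, and the size formula floor((n + 2p - k) / s) + 1 assumes the same padding p on every side of a square input.

```python
def zero_pad(image, pad):
    """Return a copy of a 2D nested list surrounded by `pad` rows/columns of zeros."""
    width = len(image[0]) + 2 * pad
    padded = [[0] * width for _ in range(pad)]            # top border
    for row in image:
        padded.append([0] * pad + list(row) + [0] * pad)  # left and right borders
    padded.extend([0] * width for _ in range(pad))        # bottom border
    return padded


def output_size(n, k, stride=1, pad=0):
    """Output size along one axis: floor((n + 2*pad - k) / stride) + 1."""
    return (n + 2 * pad - k) // stride + 1


image = [
    [1, 2, 3, 4],
    [5, 6, 7, 8],
    [9, 10, 11, 12],
    [13, 14, 15, 16],
]

k = 3                    # odd filter size, chosen for illustration
same_pad = (k - 1) // 2  # padding per side that keeps the size at stride 1

padded = zero_pad(image, same_pad)
valid_out = output_size(4, k)               # valid padding: output shrinks
same_out = output_size(4, k, pad=same_pad)  # same padding: size preserved
print(len(padded), len(padded[0]), valid_out, same_out)
```

Here the 4×4 input becomes 6×6 after padding, so a 3×3 filter at stride 1 again yields a 4×4 output, whereas valid padding would reduce it to 2×2.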