In Natural Language Processing (NLP), stemming and lemmatization are text normalization techniques that reduce words to their root form. This helps improve text analysis and the performance of NLP tasks such as information retrieval and text mining. This article explains both techniques, their applications and limitations, and how to implement them in Python.

Stemming

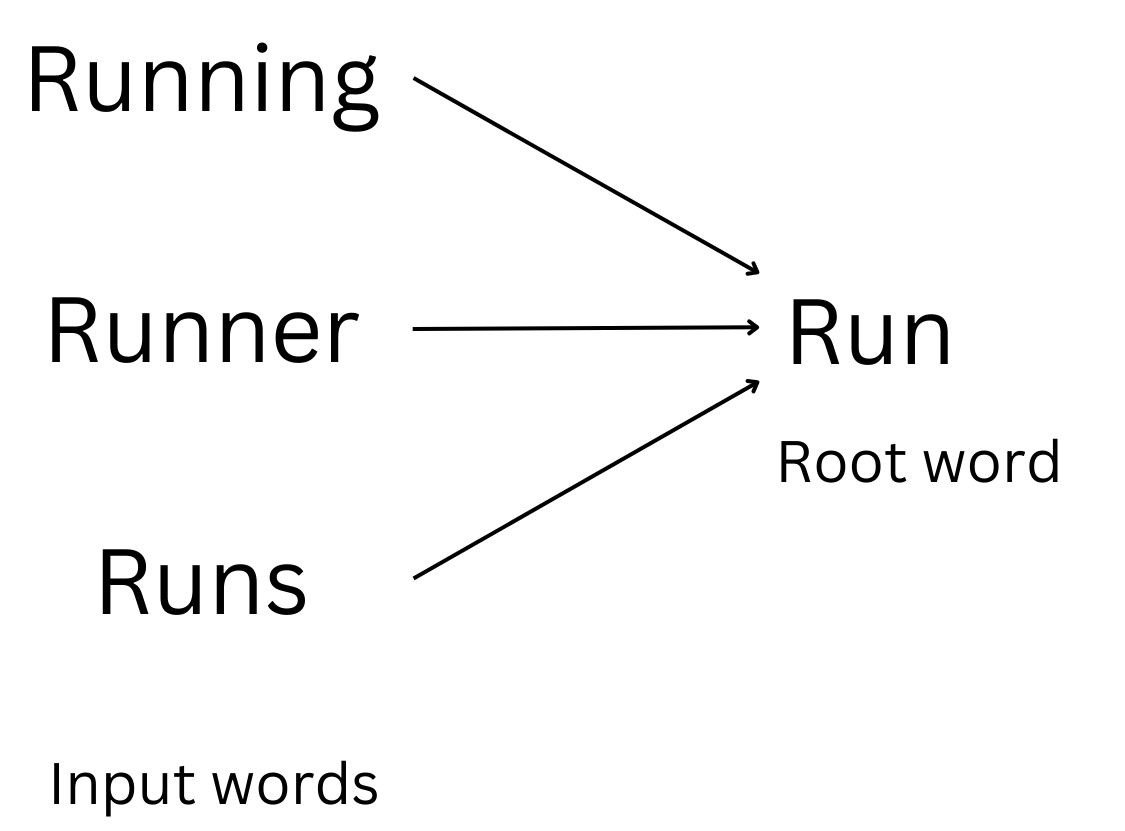

Stemming is a text normalization technique used in NLP to reduce words to their base or root form, called the stem. The idea is to remove affixes (prefixes or suffixes) from words so that variations of a word map to the same stem. For example, the words “running” and “runs” both reduce to the stem “run.”

Stemming uses an algorithm, usually a set of simple rules, to strip these affixes. Because the rules ignore meaning, the result is not always a linguistically correct root; it only approximates one for computational purposes and can even be a non-word. For example, the Porter stemmer reduces the word “ability” to “abil”, which is meaningless on its own.
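To make rule-based suffix stripping concrete, here is a deliberately tiny sketch. The suffix list, the `MIN_STEM` length, and the `toy_stem` function are hypothetical and far simpler than a real stemmer such as Porter; the undoubling step is only a simplified version of one of Porter's rules. The point is to show how fixed rules can map “running” and “runs” to “run” while also turning “ability” into the non-word “abil”.

```python
# Hypothetical suffix rules, checked in order (longer suffixes first).
SUFFIXES = ["ity", "ing", "ed", "s"]
# Hypothetical minimum stem length, so short words like "bus" are left alone.
MIN_STEM = 3


def toy_stem(word: str) -> str:
    """Strip the first matching suffix from a lowercased word."""
    word = word.lower()
    for suffix in SUFFIXES:
        stem = word[: -len(suffix)]
        if word.endswith(suffix) and len(stem) >= MIN_STEM:
            # Undo consonant doubling left behind by "-ing"/"-ed" ("runn" -> "run"),
            # except for l, s and z ("falling" -> "fall").
            if suffix in ("ing", "ed") and stem[-1] == stem[-2] and stem[-1] not in "lsz":
                stem = stem[:-1]
            return stem
    # No rule applied: return the word unchanged.
    return word


for w in ["running", "runs", "ability", "bus"]:
    print(w, "->", toy_stem(w))
```

Nothing in these rules checks whether the output is a real word, which is exactly why rule-based stemmers can produce stems like “abil”.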

Applications of Stemming

Stemming is often used in tasks like information retrieval, search engines, and text mining to reduce the vocabulary size and group together similar words.
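As a rough illustration of vocabulary reduction, the sketch below stems a small, made-up list of tokens with NLTK's `PorterStemmer` and compares the number of distinct forms before and after. It assumes NLTK is installed; the token list is purely illustrative.

```python
from nltk.stem import PorterStemmer

stemmer = PorterStemmer()

# Illustrative tokens: five surface forms of the same underlying word
tokens = ["connect", "connected", "connecting", "connection", "connections"]

# Map every token to its Porter stem
stems = [stemmer.stem(t) for t in tokens]

print("Distinct forms before stemming:", len(set(tokens)))
print("Distinct forms after stemming:", len(set(stems)))
print(stems)
```

In a search setting, stemming both the indexed text and the query this way lets a query for “connections” match a document that only contains “connected”.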

Stemming Algorithms

Popular stemming algorithms include Porter Stemmer, Snowball Stemmer, and Lancaster Stemmer.
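Snowball's English stemmer (also known as Porter2) is a revised version of the Porter algorithm, and NLTK exposes it as `SnowballStemmer`, which also supports several other languages. A minimal comparison with `PorterStemmer`, assuming NLTK is installed:

```python
from nltk.stem import PorterStemmer, SnowballStemmer

porter = PorterStemmer()
snowball = SnowballStemmer("english")  # the language is selected by name

# Compare the two stemmers word by word
for word in ["running", "fairly", "easily"]:
    print(f"{word}: Porter={porter.stem(word)}, Snowball={snowball.stem(word)}")

# Languages supported by NLTK's Snowball implementation
print(SnowballStemmer.languages)
```

Here Snowball reduces “fairly” to “fair”, while Porter returns “fairli”.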

Here’s an example using the Porter stemmer:

Input word: “Running”

Stemmed word: “Run”

Implementation of Stemming

In this section, the Porter and Lancaster stemmers are applied to a list of words using the NLTK library in Python.

```python
from nltk.stem import PorterStemmer, LancasterStemmer

# Initialize the stemmers
porter_stemmer = PorterStemmer()
lancaster_stemmer = LancasterStemmer()

# List of words to be stemmed
words = ["running", "ran", "runs", "easily", "fairly", "consistency", "consistent"]

# Apply the Porter stemmer and map each word to its stem
porter_stems = [porter_stemmer.stem(word) for word in words]
print("Porter Stemmer Results:")
print(dict(zip(words, porter_stems)))

# Apply the Lancaster stemmer and map each word to its stem
lancaster_stems = [lancaster_stemmer.stem(word) for word in words]
print("Lancaster Stemmer Results:")
print(dict(zip(words, lancaster_stems)))
```

Output:

```text
Porter Stemmer Results:
{'running': 'run', 'ran': 'ran', 'runs': 'run', 'easily': 'easili', 'fairly': 'fairli', 'consistency': 'consist', 'consistent': 'consist'}
Lancaster Stemmer Results:
{'running': 'run', 'ran': 'ran', 'runs': 'run', 'easily': 'easy', 'fairly': 'fair', 'consistency': 'consist', 'consistent': 'consist'}
```

Each result is printed as a dictionary in which the key is the original word and the value is its stem.

Both stemmers agree on most of these words but differ on “easily” and “fairly”. In general, the Lancaster stemmer applies more aggressive rules than the Porter stemmer, so it tends to produce shorter and sometimes less readable stems.
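To see the difference in aggressiveness more clearly, the sketch below runs both NLTK stemmers on a few extra words chosen for illustration (they are not part of the word list above). It assumes NLTK is installed.

```python
from nltk.stem import LancasterStemmer, PorterStemmer

porter = PorterStemmer()
lancaster = LancasterStemmer()

# Extra example words, chosen to highlight Lancaster's heavier suffix stripping
for word in ["maximum", "cement", "provision"]:
    print(f"{word}: Porter={porter.stem(word)}, Lancaster={lancaster.stem(word)}")
```

Lancaster cuts “cement” down to “cem” and “maximum” to “maxim”, whereas Porter leaves both words unchanged.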

Lemmatization

Lemmatization is also a text normalization technique; it transforms words into their base or dictionary form, known as the lemma. Unlike stemming, lemmatization takes the context and part of speech of a word into account and produces valid words. It relies on knowledge of the language, such as a vocabulary and morphological rules, to derive the base form.

Here’s an example of lemmatization:

Input word: “Running”

Lemma: “Run”

Lemmatization also handles variations in grammatical features such as tense, gender, and number, mapping each inflected form to the same lemma.

Because lemmatization takes the grammatical structure and context of the sentence into account, it is more computationally intensive than stemming.

Applications of Lemmatization

It is commonly used in tasks where word accuracy and linguistic correctness are crucial, such as machine translation, sentiment analysis, and question-answering systems.

In summary, stemming and lemmatization both reduce words to their base forms, but lemmatization produces valid words by considering linguistic rules and context, while stemming uses simpler rules to approximate word roots without considering context or linguistic correctness.